这篇教程Keras在mnist上的CNN实践,并且自定义loss函数曲线图操作写得很实用,希望能帮到您。

使用keras实现CNN,直接上代码:from keras.datasets import mnistfrom keras.models import Sequentialfrom keras.layers import Dense, Dropout, Activation, Flattenfrom keras.layers import Convolution2D, MaxPooling2Dfrom keras.utils import np_utilsfrom keras import backend as K class LossHistory(keras.callbacks.Callback): def on_train_begin(self, logs={}): self.losses = {'batch':[], 'epoch':[]} self.accuracy = {'batch':[], 'epoch':[]} self.val_loss = {'batch':[], 'epoch':[]} self.val_acc = {'batch':[], 'epoch':[]} def on_batch_end(self, batch, logs={}): self.losses['batch'].append(logs.get('loss')) self.accuracy['batch'].append(logs.get('acc')) self.val_loss['batch'].append(logs.get('val_loss')) self.val_acc['batch'].append(logs.get('val_acc')) def on_epoch_end(self, batch, logs={}): self.losses['epoch'].append(logs.get('loss')) self.accuracy['epoch'].append(logs.get('acc')) self.val_loss['epoch'].append(logs.get('val_loss')) self.val_acc['epoch'].append(logs.get('val_acc')) def loss_plot(self, loss_type): iters = range(len(self.losses[loss_type])) plt.figure() # acc plt.plot(iters, self.accuracy[loss_type], 'r', label='train acc') # loss plt.plot(iters, self.losses[loss_type], 'g', label='train loss') if loss_type == 'epoch': # val_acc plt.plot(iters, self.val_acc[loss_type], 'b', label='val acc') # val_loss plt.plot(iters, self.val_loss[loss_type], 'k', label='val loss') plt.grid(True) plt.xlabel(loss_type) plt.ylabel('acc-loss') plt.legend(loc="upper right") plt.show() history = LossHistory() batch_size = 128nb_classes = 10nb_epoch = 20img_rows, img_cols = 28, 28nb_filters = 32pool_size = (2,2)kernel_size = (3,3)(X_train, y_train), (X_test, y_test) = mnist.load_data()X_train = X_train.reshape(X_train.shape[0], img_rows, img_cols, 1)X_test = X_test.reshape(X_test.shape[0], img_rows, img_cols, 1)input_shape = (img_rows, img_cols, 1) X_train = X_train.astype('float32')X_test = X_test.astype('float32')X_train /= 255X_test /= 255print('X_train shape:', X_train.shape)print(X_train.shape[0], 'train samples')print(X_test.shape[0], 'test samples') Y_train = np_utils.to_categorical(y_train, nb_classes)Y_test = np_utils.to_categorical(y_test, nb_classes) model3 = Sequential() model3.add(Convolution2D(nb_filters, kernel_size[0] ,kernel_size[1], border_mode='valid', input_shape=input_shape))model3.add(Activation('relu')) model3.add(Convolution2D(nb_filters, kernel_size[0], kernel_size[1]))model3.add(Activation('relu')) model3.add(MaxPooling2D(pool_size=pool_size))model3.add(Dropout(0.25)) model3.add(Flatten()) model3.add(Dense(128))model3.add(Activation('relu'))model3.add(Dropout(0.5)) model3.add(Dense(nb_classes))model3.add(Activation('softmax')) model3.summary() model3.compile(loss='categorical_crossentropy', optimizer='adadelta', metrics=['accuracy']) model3.fit(X_train, Y_train, batch_size=batch_size, epochs=nb_epoch, verbose=1, validation_data=(X_test, Y_test),callbacks=[history]) score = model3.evaluate(X_test, Y_test, verbose=0)print('Test score:', score[0])print('Test accuracy:', score[1]) #acc-losshistory.loss_plot('epoch')补充:使用keras全连接网络训练mnist手写数字识别并输出可视化训练过程以及预测结果 前言mnist 数字识别问题的可以直接使用全连接实现但是效果并不像CNN卷积神经网络好。Keras是目前最为广泛的深度学习工具之一,底层可以支持Tensorflow、MXNet、CNTK、Theano 准备工作TensorFlow版本:1.13.1 Keras版本:2.1.6 Numpy版本:1.18.0 matplotlib版本:2.2.2 导入所需的库from keras.layers import Dense,Flatten,Dropoutfrom keras.datasets import mnistfrom keras import Sequentialimport matplotlib.pyplot as pltimport numpy as np Dense输入层作为全连接,Flatten用于全连接扁平化操作(也就是将二维打成一维),Dropout避免过拟合。使用datasets中的mnist的数据集,Sequential用于构建模型,plt为可视化,np用于处理数据。 划分数据集# 训练集 训练集标签 测试集 测试集标签(train_image,train_label),(test_image,test_label) = mnist.load_data()print('shape:',train_image.shape) #查看训练集的shapeplt.imshow(train_image[0]) #查看第一张图片print('label:',train_label[0]) #查看第一张图片对应的标签plt.show()输出shape以及标签label结果:

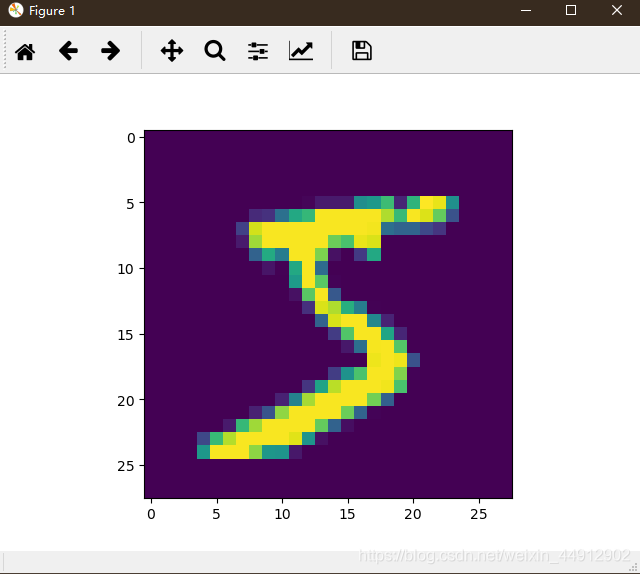

查看mnist数据集中第一张图片:

数据归一化train_image = train_image.astype('float32')test_image = test_image.astype('float32')train_image /= 255.0test_image /= 255.0将数据归一化,以便于训练的时候更快的收敛。 模型构建#初始化模型(模型的优化 ---> 增大网络容量,直到过拟合)model = Sequential()model.add(Flatten(input_shape=(28,28))) #将二维扁平化为一维(60000,28,28)---> (60000,28*28)输入28*28个神经元model.add(Dropout(0.1))model.add(Dense(1024,activation='relu')) #全连接层 输出64个神经元 ,kernel_regularizer=l2(0.0003)model.add(Dropout(0.1))model.add(Dense(512,activation='relu')) #全连接层model.add(Dropout(0.1))model.add(Dense(256,activation='relu')) #全连接层model.add(Dropout(0.1))model.add(Dense(10,activation='softmax')) #输出层,10个类别,用softmax分类 每层使用一次Dropout防止过拟合,激活函数使用relu,最后一层Dense神经元设置为10,使用softmax作为激活函数,因为只有0-9个数字。如果是二分类问题就使用sigmod函数来处理。 编译模型#编译模型model.compile( optimizer='adam', #优化器使用默认adam loss='sparse_categorical_crossentropy', #损失函数使用sparse_categorical_crossentropy metrics=['acc'] #评价指标) sparse_categorical_crossentropy与categorical_crossentropy的区别: sparse_categorical_crossentropy要求target为非One-hot编码,函数内部进行One-hot编码实现。 categorical_crossentropy要求target为One-hot编码。 One-hot格式如: [0,0,0,0,0,1,0,0,0,0] = 5 训练模型#训练模型history = model.fit( x=train_image, #训练的图片 y=train_label, #训练的标签 epochs=10, #迭代10次 batch_size=512, #划分批次 validation_data=(test_image,test_label) #验证集) 迭代10次后的结果:

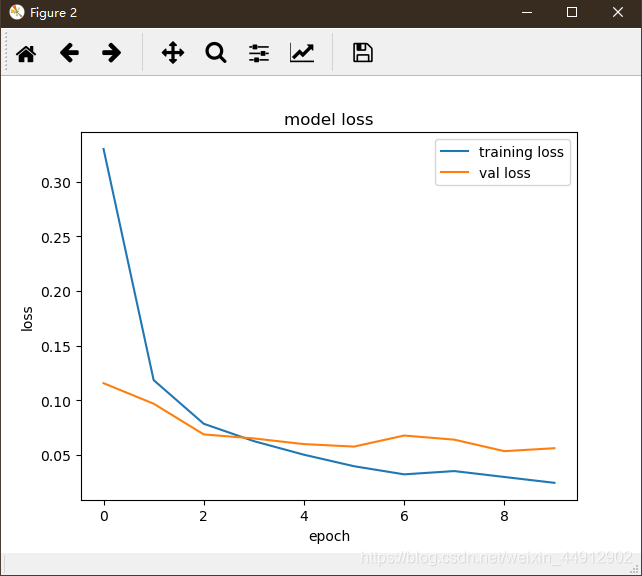

绘制loss、acc图#绘制loss acc图plt.figure()plt.plot(history.history['acc'],label='training acc')plt.plot(history.history['val_acc'],label='val acc')plt.title('model acc')plt.ylabel('acc')plt.xlabel('epoch')plt.legend(loc='lower right')plt.figure()plt.plot(history.history['loss'],label='training loss')plt.plot(history.history['val_loss'],label='val loss')plt.title('model loss')plt.ylabel('loss')plt.xlabel('epoch')plt.legend(loc='upper right')plt.show()绘制出的loss变化图:

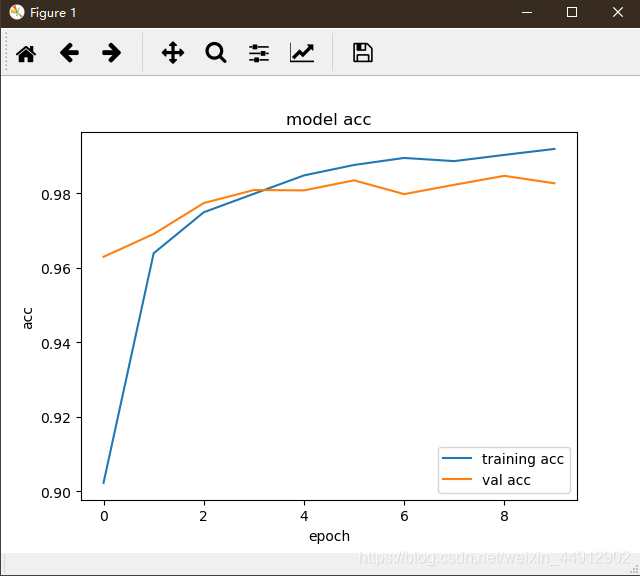

绘制出的acc变化图:

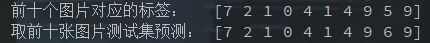

预测结果print("前十个图片对应的标签: ",test_label[:10]) #前十个图片对应的标签print("取前十张图片测试集预测:",np.argmax(model.predict(test_image[:10]),axis=1)) #取前十张图片测试集预测打印的结果:

可看到在第9个数字预测错了,标签为5的,预测成了6,为了避免这种问题可以适当的加深网络结构,或使用CNN模型。 保存模型model.save('./mnist_model.h5')完整代码from keras.layers import Dense,Flatten,Dropoutfrom keras.datasets import mnistfrom keras import Sequentialimport matplotlib.pyplot as pltimport numpy as np# 训练集 训练集标签 测试集 测试集标签(train_image,train_label),(test_image,test_label) = mnist.load_data()# print('shape:',train_image.shape) #查看训练集的shape# plt.imshow(train_image[0]) #查看第一张图片# print('label:',train_label[0]) #查看第一张图片对应的标签# plt.show()#归一化(收敛)train_image = train_image.astype('float32')test_image = test_image.astype('float32')train_image /= 255.0test_image /= 255.0#初始化模型(模型的优化 ---> 增大网络容量,直到过拟合)model = Sequential()model.add(Flatten(input_shape=(28,28))) #将二维扁平化为一维(60000,28,28)---> (60000,28*28)输入28*28个神经元model.add(Dropout(0.1))model.add(Dense(1024,activation='relu')) #全连接层 输出64个神经元 ,kernel_regularizer=l2(0.0003)model.add(Dropout(0.1))model.add(Dense(512,activation='relu')) #全连接层model.add(Dropout(0.1))model.add(Dense(256,activation='relu')) #全连接层model.add(Dropout(0.1))model.add(Dense(10,activation='softmax')) #输出层,10个类别,用softmax分类#编译模型model.compile( optimizer='adam', loss='sparse_categorical_crossentropy', metrics=['acc'])#训练模型history = model.fit( x=train_image, #训练的图片 y=train_label, #训练的标签 epochs=10, #迭代10次 batch_size=512, #划分批次 validation_data=(test_image,test_label) #验证集)#绘制loss acc 图plt.figure()plt.plot(history.history['acc'],label='training acc')plt.plot(history.history['val_acc'],label='val acc')plt.title('model acc')plt.ylabel('acc')plt.xlabel('epoch')plt.legend(loc='lower right')plt.figure()plt.plot(history.history['loss'],label='training loss')plt.plot(history.history['val_loss'],label='val loss')plt.title('model loss')plt.ylabel('loss')plt.xlabel('epoch')plt.legend(loc='upper right')plt.show()print("前十个图片对应的标签: ",test_label[:10]) #前十个图片对应的标签print("取前十张图片测试集预测:",np.argmax(model.predict(test_image[:10]),axis=1)) #取前十张图片测试集预测#优化前(一个全连接层(隐藏层))#- 1s 12us/step - loss: 1.8765 - acc: 0.8825# [7 2 1 0 4 1 4 3 5 4]# [7 2 1 0 4 1 4 9 5 9]#优化后(三个全连接层(隐藏层))#- 1s 14us/step - loss: 0.0320 - acc: 0.9926 - val_loss: 0.2530 - val_acc: 0.9655# [7 2 1 0 4 1 4 9 5 9]# [7 2 1 0 4 1 4 9 5 9]model.save('./model_nameALL.h5')总结使用全连接层训练得到的最后结果train_loss: 0.0242 - train_acc: 0.9918 - val_loss: 0.0560 - val_acc: 0.9826,由loss acc可视化图可以看出训练有着明显的效果。 以上为个人经验,希望能给大家一个参考,也希望大家多多支持51zixue.net。

python编写五子棋游戏

Python中的Nonetype类型怎么判断 |