最近想做一个关于中文OCR的小系列,也是对之前做的东西的一个总结。作为这个系列的第一篇,我觉得还是有必要说一下关于中文字符图片分割的问题。因为现在开源的OCR代码比较多,相对而言对字符图片的分割的方法提的比较少,尤其是中文字符图片的分割还是有一定困难在里面的。

一、普遍使用的切割方法

现在大部分开源项目上用切割方法还是基于水平、垂直投影字符切割方法。

原理比较简单容易理解,也比较容易实现。先对一个文本图片进行水平投影,得到图片在垂直方向上的像素分布,有像素存在的区域即为文本所在区域;将该行文本切割下来,再对该行文本图片进行垂直投影,得到水平方向的像素分布,同理有像素存在的区域基本字符所在区域。

from PIL import Image

import numpy as np

min_thresh = 2 #字符上最少的像素点

min_range = 5 #字符最小的宽度

def vertical(img_arr):

h,w = img_arr.shape

ver_list = []

for x in range(w):

ver_list.append(h - np.count_nonzero(img_arr[:, x]))

return ver_list

def horizon(img_arr):

h,w = img_arr.shape

hor_list = []

for x in range(h):

hor_list.append(w - np.count_nonzero(img_arr[x, :]))

return hor_list

def OTSU_enhance(img_gray, th_begin=0, th_end=256, th_step=1):

max_g = 0

suitable_th = 0

for threshold in xrange(th_begin, th_end, th_step):

bin_img = img_gray > threshold

bin_img_inv = img_gray <= threshold

fore_pix = np.sum(bin_img)

back_pix = np.sum(bin_img_inv)

if 0 == fore_pix:

break

if 0 == back_pix:

continue

w0 = float(fore_pix) / img_gray.size

u0 = float(np.sum(img_gray * bin_img)) / fore_pix

w1 = float(back_pix) / img_gray.size

u1 = float(np.sum(img_gray * bin_img_inv)) / back_pix

# intra-class variance

g = w0 * w1 * (u0 - u1) * (u0 - u1)

if g > max_g:

max_g = g

suitable_th = threshold

return suitable_th

def cut_line(horz, pic):

begin, end = 0, 0

w, h = pic.size

cuts=[]

for i,count in enumerate(horz):

if count >= min_thresh and begin == 0:

begin = i

elif count >= min_thresh and begin != 0:

continue

elif count <= min_thresh and begin != 0:

end = i

#print (begin, end), count

if end - begin >= 2:

cuts.append((end - begin, begin, end))

begin = 0

end = 0

continue

elif count <= min_thresh or begin == 0:

continue

cuts = sorted(cuts, reverse=True)

if len(cuts) == 0:

return 0, False

else:

if len(cuts) > 1 and cuts[1][0] in range(int(cuts[0][0] * 0.8), cuts[0][0]):

return 0, False

else:

crop_ax = (0, cuts[0][1], w, cuts[0][2])

img_arr = np.array(pic.crop(crop_ax))

return img_arr, True

def simple_cut(vert):

begin, end = 0,0

cuts = []

for i,count in enumerate(vert):

if count >= min_thresh and begin == 0:

begin = i

elif count >= min_thresh and begin != 0:

continue

elif count <= min_thresh and begin != 0:

end = i

#print (begin, end), count

if end - begin >= min_range:

cuts.append((begin, end))

begin = 0

end = 0

continue

elif count <= min_thresh or begin == 0:

continue

return cuts

pic_path = './test.jpg'

save_path = './SplitChar/'

src_pic = Image.open(pic_path).convert('L')

src_arr = np.array(src_pic)

threshold = OTSU_enhance(src_arr)

bin_arr = np.where(src_arr < threshold, 0, 255) #先用大津阈值将图片二值化处理

horz = horizon(bin_arr) #获取到文本 水平 方向的投影

line_arr, flag = cut_line(horz, src_pic) #把文字(行)所在的位置切割下来

if flag == False: #如果flag==False 说明没有切到一行文字

exit()

line_arr = np.where(line_arr < threshold, 0, 255)

line_img = Image.fromarray((255 - line_arr).astype("uint8"))

width, height = line_img.size

vert = vertical(line_arr) #获取到该行的 垂直 方向的投影

cut = simple_cut(vert) #直接对目标行进行切割

for x in range(len(cut)):

ax = (cut[x][0] - 1, 0, cut[x][1] + 1, height)

temp = line_img.crop(ax)

temp = image_unit.resize(temp, save_size)

temp.save('{}/{}.jpg'.format(save_path, x))

因为在切割的时候,根据手动设置的字符宽度和、字符间隙宽度的阈值进行分割,所以存在以下几个的问题:

1、对字符排列密集的图片分割效果差

2、对左右结构的字,且左右结构缝隙比较大如“川”

3、对一部分垂直投影占得像素点比较少的字符,可以会认为是噪声,比如“上”

4、需要手动设置阈值,阈值不能对所有文本适用

5、对中英文、标点、数字的混合文本处理十分不好

二、统计分割

上面的方法的缺陷在于它的阈值选择上,如何分割字符的问题就变成了,选择什么样的阈值去判断字符宽度和两个字符的间隙。其实一开始我也想到过用网络直接去定位一块一块字符,但实际操作了一下,精确度着实不高,而且会影响速度。

所以有如下几个任务:

1、自动选择阈值去判断什么样的宽度是一个完整的字,什么样的宽度是一个偏旁部首

2、如果是偏旁,如何与另一个偏旁相连

3、如何是标点或是数字、英文怎么办呢?它们的宽度和偏旁是类似的

那么只需要在上面的基础上稍作一下修改,找出特定的阈值,从而判断是什么部分,作出什么操作就OK

from sklearn.cluster import KMeans

def isnormal_width(w_judge, w_normal_min, w_normal_max):

if w_judge < w_normal_min:

return -1

elif w_judge > w_normal_max:

return 1

else:

return 0

def cut_by_kmeans(pic_path, save_path, save_size):

src_pic = Image.open(pic_path).convert('L') #先把图片转化为灰度

src_arr = np.array(src_pic)

threshold = OTSU_enhance(src_arr) * 0.9 #用大津阈值

bin_arr = np.where(src_arr < threshold, 0, 255) #二值化图片

horz = horizon(bin_arr) #获取到该行的 水平 方向的投影

line_arr, flag = cut_line(horz, src_pic) #把文字(行)所在的位置切割下来

if flag == False:

return flag

line_arr = np.where(line_arr < threshold, 0, 255)

line_img = Image.fromarray((255 - line_arr).astype("uint8"))

width, height = line_img.size

vert = vertical(line_arr) #获取到该行的 垂直 方向的投影

cut = simple_cut(vert) #先进行简单的文字分割(即有空隙就切割)

#cv.line(img,(x1,y1), (x2,y2), (0,0,255),2)

width_data = []

width_data_TooBig = []

width_data_withoutTooBig = []

for i in range(len(cut)):

tmp = (cut[i][1] - cut[i][0], 0)

if tmp[0] > height * 1.8: #比这一行的高度大两倍的肯定是连在一起的

temp = (tmp[0], i)

width_data_TooBig.append(temp)

else:

width_data_withoutTooBig.append(tmp)

width_data.append(tmp)

kmeans = KMeans(n_clusters=2).fit(width_data_withoutTooBig)

#print "聚簇中心点:", kmeans.cluster_centers_

#print 'label:', kmeans.labels_

#print '方差:', kmeans.inertia_

label_tmp = kmeans.labels_

label = []

j = 0

k = 0

for i in range(len(width_data)): #将label整理,2代表大于一个字的

if j != len(width_data_TooBig) and k != len(label_tmp):

if i == width_data_TooBig[j][1]:

label.append(2)

j = j + 1

else:

label.append(label_tmp[k])

k = k + 1

elif j == len(width_data_TooBig) and k != len(label_tmp):

label.append(label_tmp[k])

k = k + 1

elif j != len(width_data_TooBig) and k == len(label_tmp):

label.append(2)

j = j + 1

label0_example = 0

label1_example = 0

for i in range(len(width_data)):

if label[i] == 0:

label0_example = width_data[i][0]

elif label[i] == 1:

label1_example = width_data[i][0]

if label0_example > label1_example: #找到正常字符宽度的label(宽度大的,防止切得太碎导致字符宽度错误)

label_width_normal = 0

else:

label_width_normal = 1

label_width_small = 1 - label_width_normal

cluster_center = []

cluster_center.append(kmeans.cluster_centers_[0][0])

cluster_center.append(kmeans.cluster_centers_[1][0])

for i in range(len(width_data)):

if label[i] == label_width_normal and width_data[i][0] > cluster_center[label_width_normal] * 4 / 3:

label[i] = 2

temp = (width_data[i][0], i)

width_data_TooBig.append(temp)

max_gap = get_max_gap(cut)

for i in range(len(label)):

if i == max_gap[1]:

label[i] = 3

width_normal_data = [] #存正常字符宽度

width_data_TooSmall = []

for i in range(len(width_data)):

if label[i] == label_width_normal:

width_normal_data.append(width_data[i][0])

elif label[i] != label_width_normal and label[i] != 2 and label[i] != 3: #切得太碎的

#box=(cut[i][0],0,cut[i][1],height)

#region=line_img.crop(box) #此时,region是一个新的图像对象。

#region_arr = 255 - np.array(region)

#region = Image.fromarray(region_arr.astype("uint8"))

#name = "single"+str(i)+".jpg"

#region.save(name)

tmp = (width_data[i][0], i)

width_data_TooSmall.append(tmp)

width_normal_max = max(width_normal_data)

width_normal_min = min(width_normal_data) #得到正常字符宽度的上下限

if len(width_data_TooBig) != 0:

for i in range(len(width_data_TooBig)):

index = width_data_TooBig[i][1]

mid = (cut[index][0] + cut[index][1]) / 2

tmp1 = (cut[index][0], int(mid))

tmp2 = (int(mid)+1, cut[index][1])

del cut[index]

cut.insert(index, tmp2)

cut.insert(index, tmp1)

del width_data[index]

tmp1 = (tmp1[1] - tmp1[0], index)

tmp2 = (tmp2[1] - tmp2[0], index+1)

width_data.insert(index, tmp2)

width_data.insert(index, tmp1)

label[index] = label_width_normal

label.insert(index, label_width_normal)

if len(width_data_TooSmall) != 0: #除':'以外有小字符,先找'('、')'label = 4

for i in range(len(width_data_TooSmall)):

index = width_data_TooSmall[i][1]

border_left = cut[index][0] + 1

border_right = cut[index][1]

RoI_data = line_arr[:,border_left:border_right]

#RoI_data = np.where(RoI_data < threshold, 0, 1)

horz = horizon(RoI_data)

up_down = np.sum(np.abs(RoI_data - RoI_data[::-1]))

left_right = np.sum(np.abs(RoI_data - RoI_data[:,::-1]))

vert = vertical(RoI_data)

if up_down <= left_right * 0.6 and np.array(vert).var() < len(vert) * 2:

#print i, up_down, left_right,

#print vert, np.array(vert).var()

label[index] = 4

index_delete = [] #去掉这些index右边的线

cut_final = []

width_untilnow = 0

for i in range(len(width_data)):

if label[i] == label_width_small and width_untilnow == 0:

index_delete.append(i)

cut_left = cut[i][0]

width_untilnow = cut[i][1] - cut[i][0]

#print cut_left,width_untilnow,i

elif label[i] != 3 and label[i] != 4 and width_untilnow != 0:

width_untilnow = cut[i][1] - cut_left

if isnormal_width(width_untilnow, width_normal_min, width_normal_max) == -1: #还不够长

index_delete.append(i)

#print cut_left,width_untilnow,i

elif isnormal_width(width_untilnow, width_normal_min, width_normal_max) == 0: #拼成一个完整的字

width_untilnow = 0

cut_right = cut[i][1]

tmp = (cut_left, cut_right)

cut_final.append(tmp)

#print 'complete',i

elif isnormal_width(width_untilnow, width_normal_min, width_normal_max) == 1: #一下子拼多了

#print 'cut error!!!!',cut_left,width_untilnow,i

width_untilnow = 0

cut_right = cut[i-1][1]

tmp = (cut_left, cut_right)

cut_final.append(tmp)

#print 'cut error!!!!',i

index_delete.append(i)

cut_left = cut[i][0]

width_untilnow = cut[i][1] - cut[i][0]

if i == len(width_data):

tmp = (cut[i][0], cut[i][1])

cut_final.append(tmp)

#print i

else:

tmp = (cut[i][0], cut[i][1])

cut_final.append(tmp)

i1 = len(cut_final) - 1

i2 = len(cut) - 1

if cut_final[i1][1] != cut[i2][1]:

tmp = (cut[i2][0], cut[i2][1])

cut_final.append(tmp)

else:

cut_final = cut

for x in range(len(cut_final)):

ax = (cut_final[x][0] - 1, 0, cut_final[x][1] + 1, height)

temp = line_img.crop(ax)

temp = image_unit.resize(temp, save_size)

temp.save('{}/{}.jpg'.format(save_path, x))

return flag

这里用了一下sklearn这个机器学习库里面的Kmeans来进行分类。

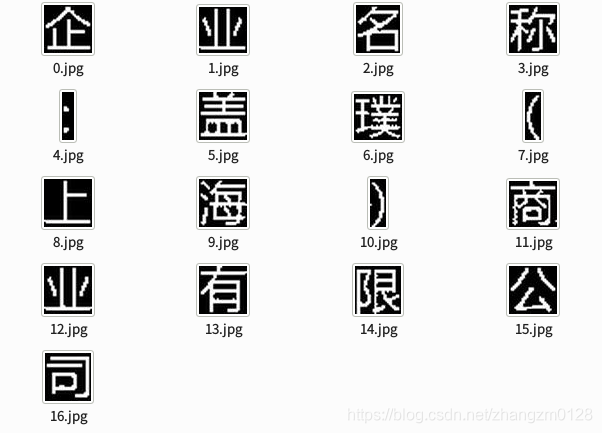

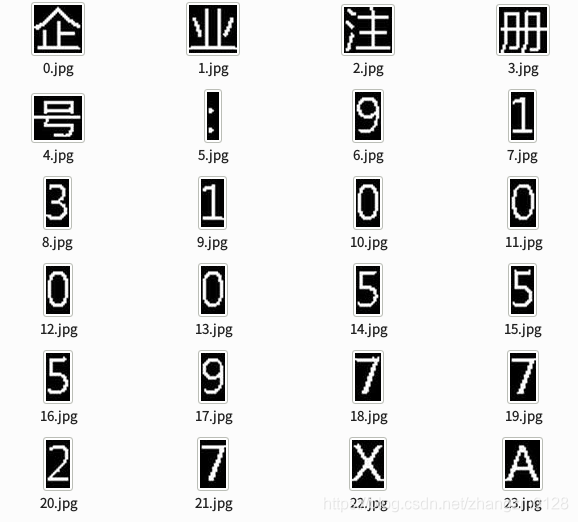

(a)直接分割的结果 (b)用Kmeans分割的结果